How to design high quality AI agents for instant productivity, reduced support & training costs

In this issue I give examples of how agents are hugely reducing friction when using new tools, with a subsequent massive reduction in support and training costs.

Welcome new subscribers to Making AI Agents (MAIA), where you can trust me to avoid the hype and share my direct experiences building and researching AI agents.

Also, each issue is designed to have lasting value, so if you missed out on previous issues, feel free to catch up here.

Grab a coffee, settle down, and sit back. This is going to be a good one! Feedback welcome, link at end 👇

Recap: agents vs bots vs scripts: know the difference

Tired: bots 🤖 and scripts 📝

Wired: agents 🕵️‍♀️🔎 (ok not that kind of agent)

What's the real difference between bots, scripts, and agents? Fundamentally:

AI agents are better than bots and scripts because they can take plain English instructions, autonomously create their own plans, act on them with tools, then reflect and revise.

Just for fun though, tools that run through a fixed set of steps are also called agents. (Actually as far as I can tell, expect every marketing department to bend over backwards to present some kind of “agentic” capability, no matter what it actually does. 🤦♂️)

Agents can be integrated into your existing products in many ways.

I’m seeing agents being deployed at many different levels, a lot of them being an additional method of accessing existing tools.

In-app “micro agents”: available everywhere inside a tool, right when you have a question.

“Product” agents: automating substantial portions of a specific product or app

“Product bundle” agents: an agent that helps you bring together different apps in a product bundle.

“Cross-product” agents: the most common currently: wire together inputs and outputs for different services to create your own flow.

In-app “micro agents” everywhere inside your apps to reduce customer support

This is a really powerful retrofit to ANY product you own right now. It goes like this:

The existing UI offers a chat box users can give instructions to

The microagent carries out the task for you.

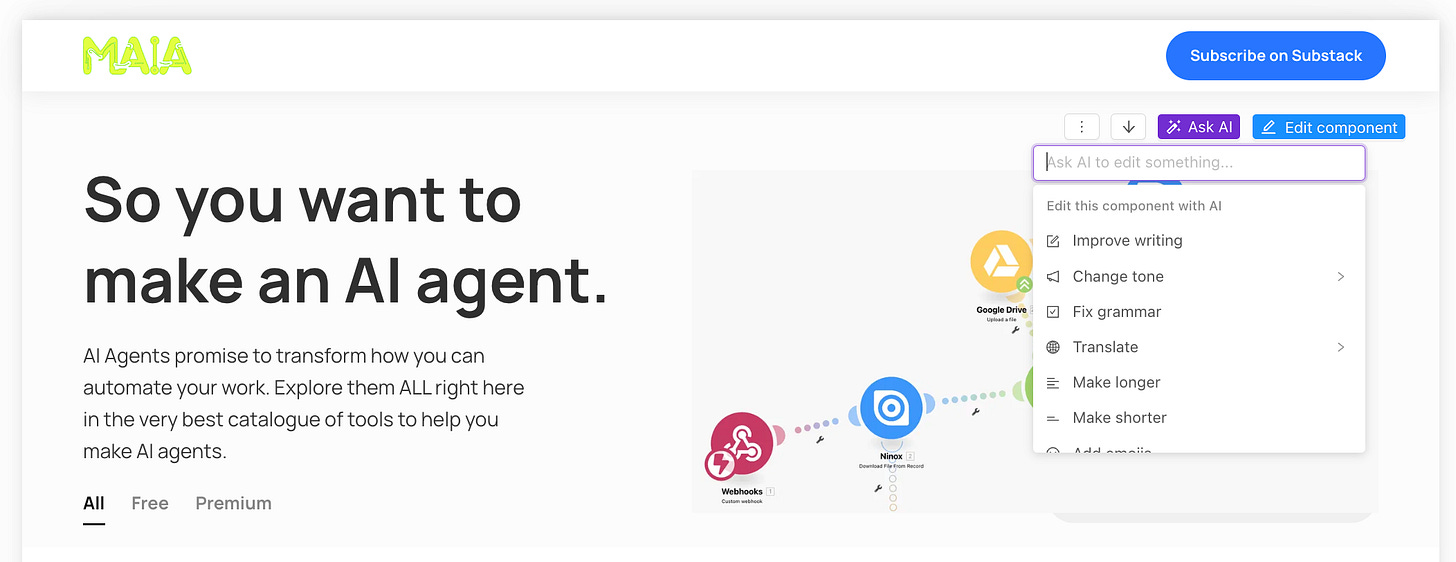

I think this is quite a shift. For example: an “Ask AI” button to help when someone gets stuck in The Unicorn Platform below. I wanted to make sure links opened up in a new window and it made the change in the appropriate section for me:

Product agents: bootstrap instant productivity by letting the user just say what they want to do.

Another pattern emerging in popularity is a chatbot that drives the UI for you from the very beginning of the journey.

Your users don’t need to know anything about how to use the tool, they simply say what they want in plain English.

This means instant productivity and subsequent reduced training costs.

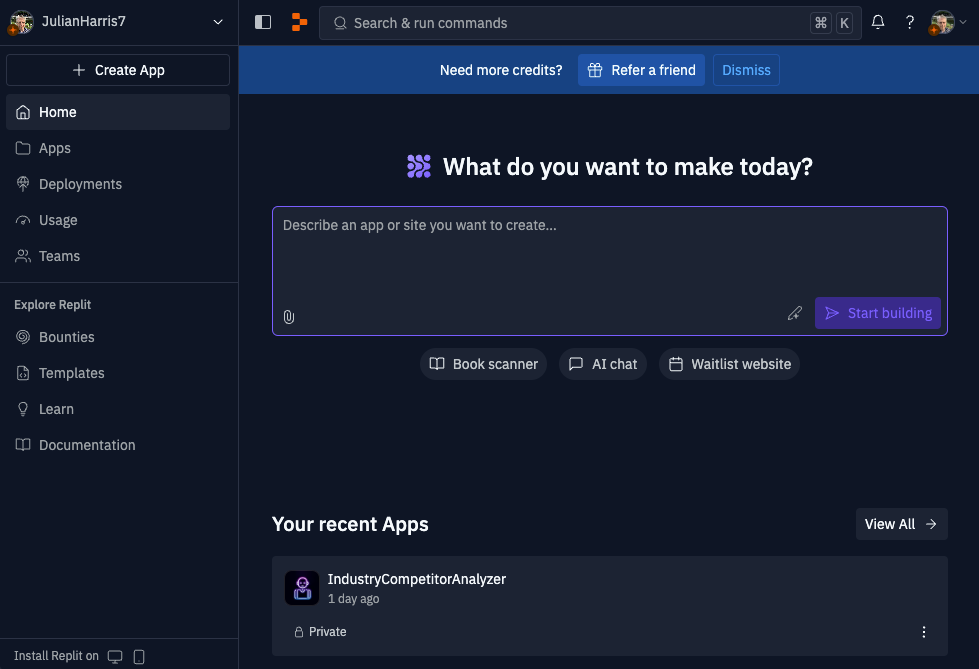

Example 1: Replit Agent

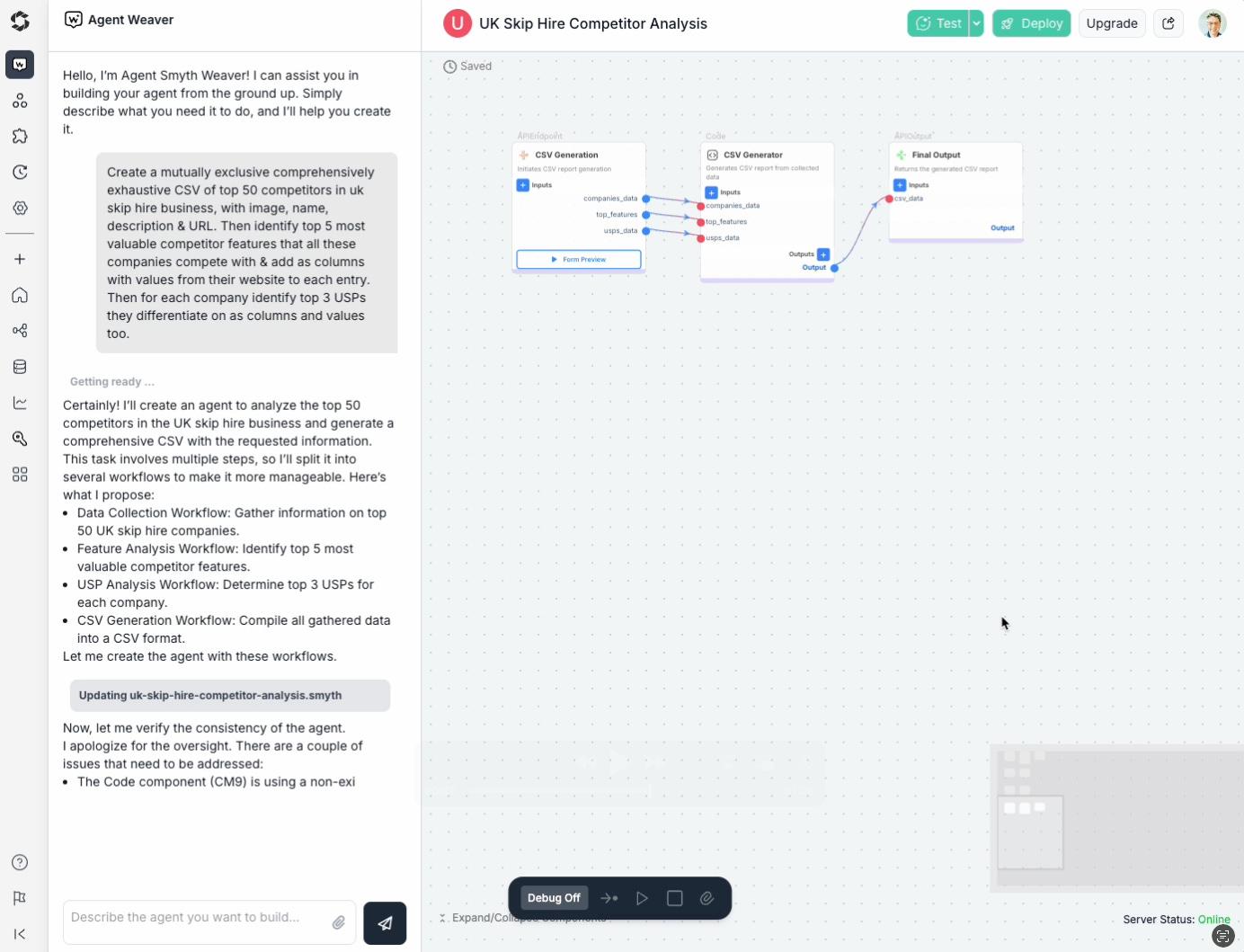

Example 2: Smyth OS agent creator

Smyth OS is a recent discovery for making agents visually. Even better, Smyth OS has an agent to help you create an agent 🤯

Start of flow screen shot:

Smyth OS next screen: all the boxes and lines shown here for the agent were created from my prompt in front of my very eyes. I could have carried on through the chatbot if I'd wanted to get more done.

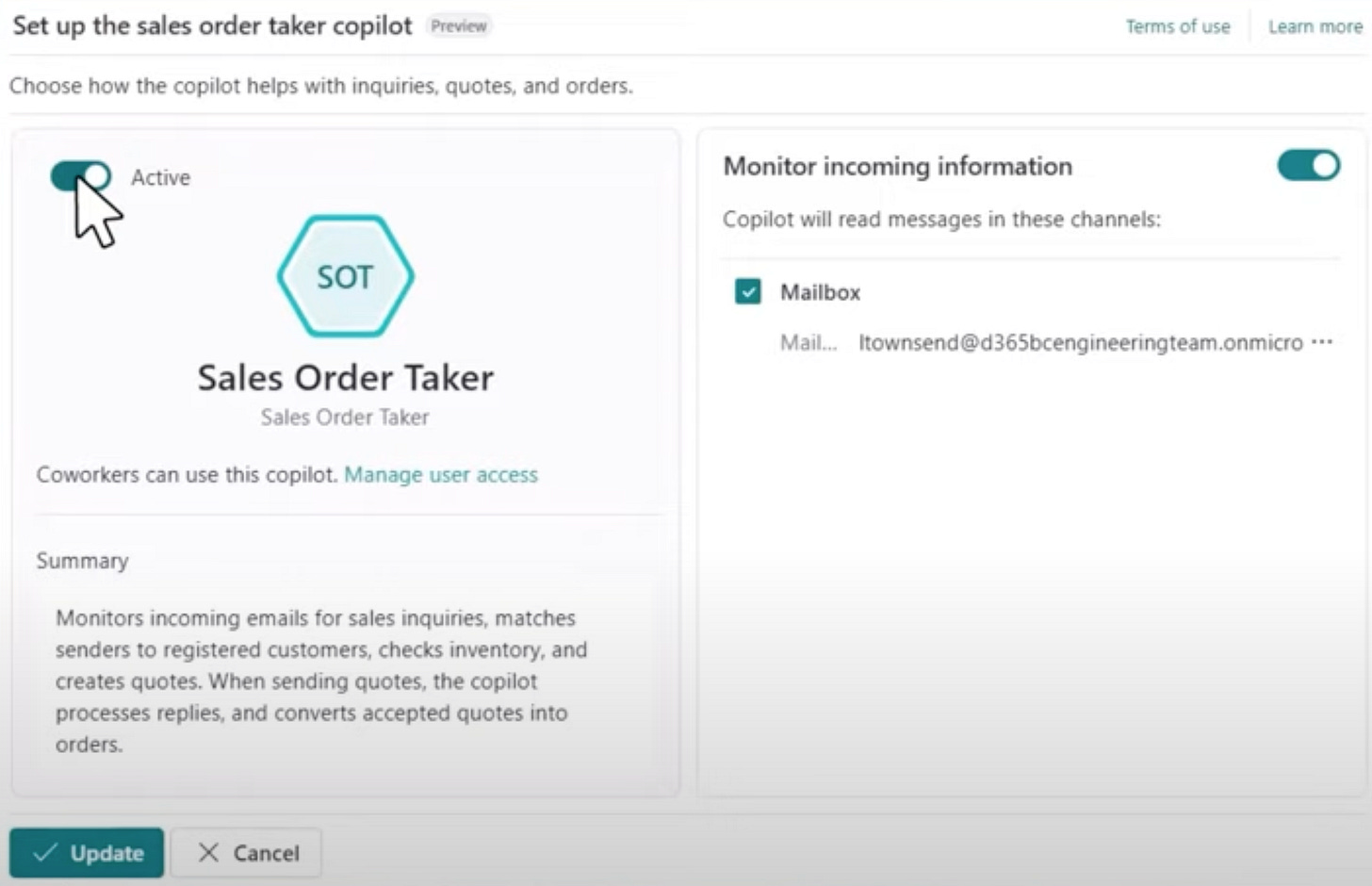

Example 3: Microsoft Dynamics 365 built-in agents

Microsoft added an agent layer on top of very specific product areas of Dynamics 365: sales orders, supplier communications, financial reconciliation, accounting reconciliation, and time & expenses.

These are:

Super niche agents

Incredibly boring for people who don’t do these tasks daily 🥱

Incredibly valuable for those who DO these tasks daily 🥳💰

Cross-product agents

This is the big one 🧨: cross-product agents are those that automate business workflows across multiple products from different vendors. Smyth OS, which I showed already, is a promising agent maker tool. Others include n8n.io, Zapier Agents, and Make.com, and there are many more. I’m building a directory of them all: stay tuned!

Future: product bundle agents will increase bundle value

This is speculation 🔮: I expect any bundle of products to offer, as standard, tightly integrated agent workflows that span the products for customised yet highly valuable workflows. That could become a big selling point for buying the bundle over individual solutions.

DABstep: helping make higher quality agents by finding the right language model

So you want to make an agent. How do you know if it’s any good? The promise of agents is to:

Take plain English instructions from people, to

Do useful things with information they provide, by

Planning, acting on the plan and

Revising it until it's good enough.

Agents do this by using:

A language model (such as GPT-4, Claude 3.5, Llama 3.3), combined with

Tools such as file access, web search, sending emails, voice calls with customers, etc. These are called variously "integrations" or "agent tools".
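To make the plan, act, revise loop concrete, here is a minimal sketch. Everything in it is an assumption for illustration: `fake_model` stands in for a real language model call, and the two entries in `TOOLS` are hypothetical stand-ins for real integrations. It is not how any particular agent framework does it.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: plain functions the agent is allowed to call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "web_search": lambda query: f"search results for '{query}'",
    "send_email": lambda body: f"email sent: {body}",
}


def fake_model(instruction: str, history: List[str]) -> dict:
    """Stand-in for a language model: decides the next step from what has happened so far."""
    if not history:
        return {"tool": "web_search", "input": instruction}
    if len(history) == 1:
        return {"tool": "send_email", "input": history[-1]}
    return {"done": True, "answer": history[-1]}


def run_agent(instruction: str, max_steps: int = 5) -> str:
    """Ask the model for a step, run the chosen tool, feed the result back, repeat."""
    history: List[str] = []
    for _ in range(max_steps):
        step = fake_model(instruction, history)      # plan the next step
        if step.get("done"):
            return step["answer"]
        result = TOOLS[step["tool"]](step["input"])  # act with a tool
        history.append(result)                       # the model sees this next time round
    return "gave up: step limit reached"


if __name__ == "__main__":
    print(run_agent("cheap flights"))
```

The step limit matters: without it, a model that never decides it is done would loop (and bill you) forever.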

But language models are hard to trust. They:

Are very expensive

Are evolving rapidly

Can be quite tricky to get consistent quality results from

Can take hours and hours of experimentation to get a “feel” for.

Benchmarks with leaderboards can help accelerate confidence in a language model for your agent:

They offer a broad baseline of real world use cases with reasonable ways to measure the results

They provide clear measures of how one language model performs against the others

They can be extended easily to support your own usage data.
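As a rough illustration of what a leaderboard boils down to, here is a minimal sketch: run each model over the same questions, score the answers, and sort. The questions, the two stand-in "models", and the exact-match scoring are all assumptions for the example; real benchmarks use far more questions and their own scoring rules.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical benchmark: (question, expected answer) pairs.
# Swap in questions from your own usage data to extend it.
QUESTIONS: List[Tuple[str, str]] = [
    ("What is 2 + 2?", "4"),
    ("Capital of France?", "Paris"),
    ("Largest planet?", "Jupiter"),
]


def normalise(answer: str) -> str:
    """Ignore case and surrounding whitespace when comparing answers."""
    return answer.strip().lower()


def accuracy(model: Callable[[str], str]) -> float:
    """Fraction of questions the model answers with an exact (normalised) match."""
    correct = sum(normalise(model(q)) == normalise(expected) for q, expected in QUESTIONS)
    return correct / len(QUESTIONS)


def leaderboard(models: Dict[str, Callable[[str], str]]) -> List[Tuple[str, float]]:
    """Score every model on the same questions, best first."""
    scores = [(name, accuracy(model)) for name, model in models.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Stand-ins for real language models.
def model_a(question: str) -> str:
    known = {"What is 2 + 2?": "4", "Capital of France?": " paris "}
    return known.get(question, "unknown")


def model_b(question: str) -> str:
    return "4"


if __name__ == "__main__":
    for name, score in leaderboard({"model_b": model_b, "model_a": model_a}):
        print(f"{name}: {score:.0%}")
```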

The previous issue of Making AI Agents covered an evaluation benchmark called The Agent Company with 175 agent use cases over a range of every day organisational roles.

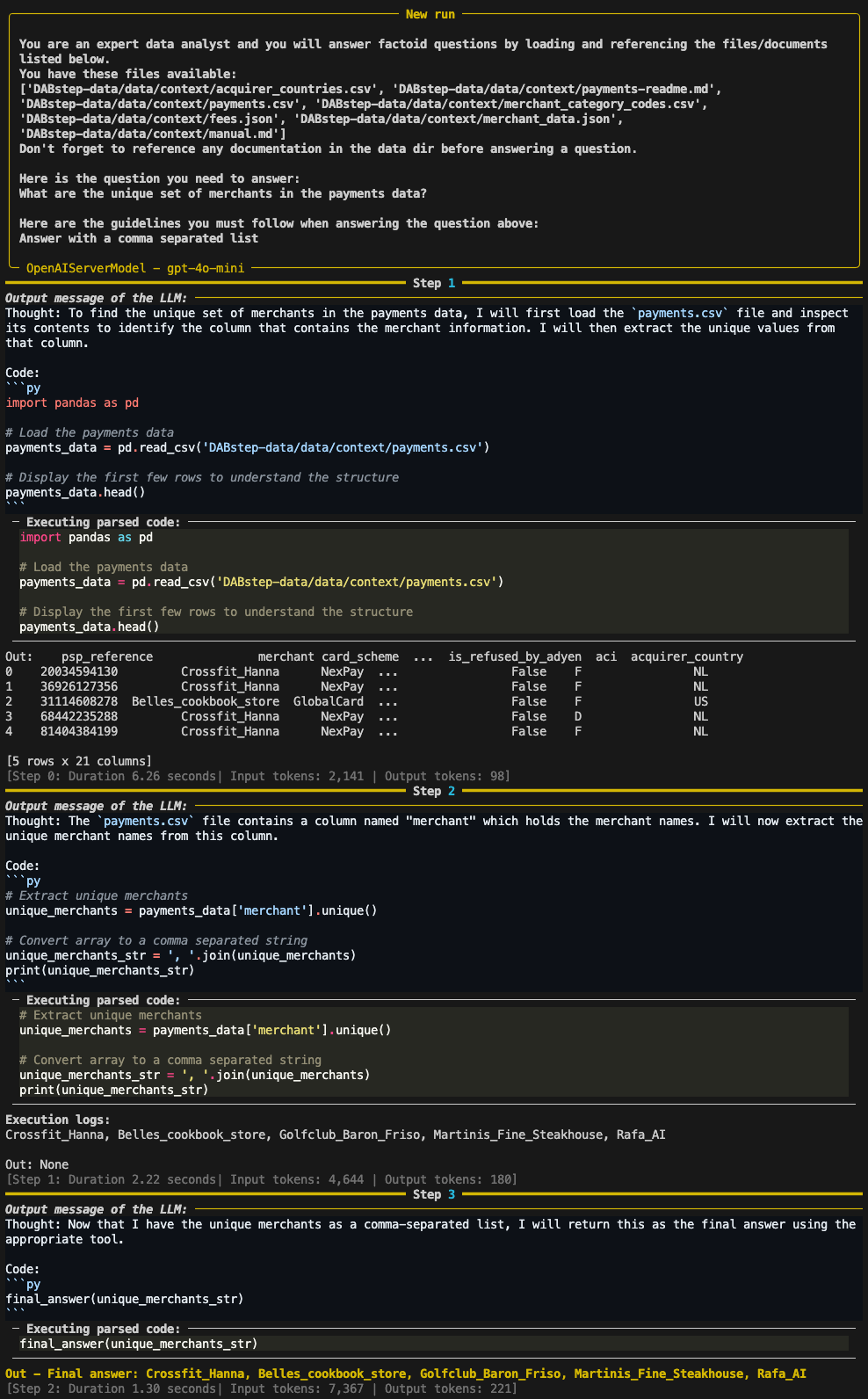

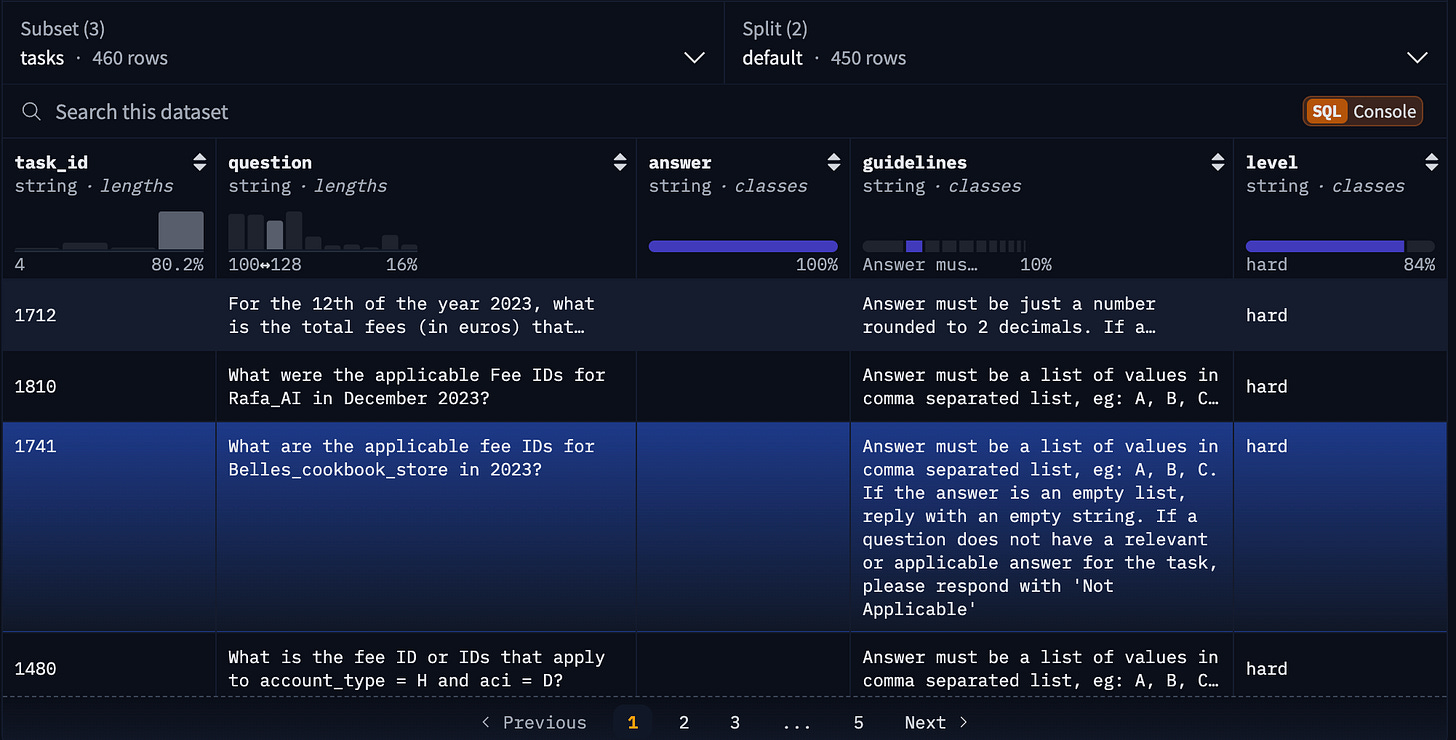

In this issue I dig into another agent benchmark called DABstep, by HuggingFace & a data specialist partner, that is compelling in a number of ways.

DABstep is appealing because:

It has 450 realistic use cases from real world commercial and financial customer queries

It's easy to run evaluations (for a programmer)

Extra bonus: to avoid bias, the leaderboard position is determined by how the language model performs against unknown questions too

I'm an AI strategist: how can I use DABstep?

DABstep can give you a signal for which language models work best with financial and commercial plain English use cases, and with natural language data queries in general. For strategy and planning, the simplest approach is to bookmark their leaderboard and, when you're looking to build an agent, check which models rank best. That'll give you a shortlist of models to start experimenting with in your agent design.

Also:

Every use case is a plain English question (typically shorter than the long paragraph briefings of The Agent Company) designed to produce a "factoid"

Agent planning required: the steps needed to do the work are left to the agent, and the nature of the questions requires the agent to make plans of at least two steps ("multi-hop" is the term used for this)

Heterogeneous data types: the data is a mix of an operations manual, a very complex set of business rules, and some tabular data that the agents have access to, to figure out how to answer each of the 450 questions

I'm an AI Engineer: gimme the details.

It uses the HuggingFace Smolagents AI agent developer toolkit to create the agents and run the benchmarks

SmolAgents is a bit of a brain-bender:

It's written in python (so far so good)

uses LLM prompts to create plain English plans (yep, with you)

… that generates MORE python (oh wait what?)

… which is then executed (yes, no human eval, you have to trust the code is ok)

The result is then evaluated: It could outright fail to run, or the result might not meet the plain English evaluation criteria

Rinse and repeat: feed all that back into the generation loop and try again (with a hard limit on numbers of tries)
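The loop described above can be sketched in a few lines. This is NOT the Smolagents implementation or API, just my illustration of the idea: `fake_generate` is a hypothetical stand-in for the LLM, `accept` stands in for the plain English evaluation, and the convention that generated code stores its answer in a variable called `result` is an assumption of this sketch.

```python
from typing import Callable, List, Optional


def run_generated_code(source: str) -> object:
    """Run generated code in a fresh namespace and return its `result` variable.

    WARNING: plain exec() is not a sandbox. Do not run untrusted code this way.
    """
    namespace: dict = {}
    exec(source, namespace)
    return namespace["result"]


def solve(
    task: str,
    generate: Callable[[str, List[str]], str],
    accept: Callable[[object], bool],
    max_tries: int = 3,
) -> Optional[object]:
    """Generate code, run it, check the result, and retry with feedback up to a hard limit."""
    feedback: List[str] = []
    for attempt in range(1, max_tries + 1):
        source = generate(task, feedback)
        try:
            result = run_generated_code(source)
        except Exception as exc:  # the generated code failed outright
            feedback.append(f"attempt {attempt} failed: {exc!r}")
            continue
        if accept(result):
            return result
        feedback.append(f"attempt {attempt} returned {result!r}, which was rejected")
    return None  # hard limit reached


def fake_generate(task: str, feedback: List[str]) -> str:
    """Stand-in for the LLM: buggy code first, a fix once it has seen feedback."""
    if not feedback:
        return "result = 1 / 0"
    return "result = sum([2, 3, 5])"


if __name__ == "__main__":
    print(solve("add up 2, 3 and 5", fake_generate, accept=lambda r: r == 10))
```

Note how the error from the first attempt is passed back to the generator: that feedback is what lets the second attempt do better.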

Using Smolagents and its novel on-the-fly code generation comes with risks. I'd DEFINITELY not recommend using this on external-facing products, although Python can be sandboxed fairly well these days (see Pyodide for instance)

The DABstep repo contains a Colab Notebook you can use to explore the code. However, it relies on HuggingFace's inference services, which were down when I tried them (less 🤗 more like sad ☹️), so I created a pure Python version of DABstep that you can use here that only relies on OpenAI. You need HuggingFace API keys (free) and OpenAI API keys (preload with a few bucks).

In DABstep-py I also tried various other open weight models through ollama but had to settle, at least for now, on 4o-mini. I'd have preferred to use Google Gemini, but Smolagents didn't yet have a Gemini connector (though it's really trivial to add).

Recommended non-hypey AI Agent articles

“Deep research” is the new hotness

Deep Research is at its core a sophisticated language model + web search tool agent that runs through a series of steps to produce more intelligent, yet factually accurate, research reports on a topic. Both Google and OpenAI have “Deep Research” products, but both are behind expensive paywalls ($20 and $200😱 respectively).

As the basic mechanism is fairly well-known, there was an immediate explosion of “Deep Research” open source “clones” just days later. Here are the open source Deep Research clones I found recently:

AI Researcher (mshumer) (one of the original ones)

Open Source DeepResearch (huggingface) / live demo

Replit Deep Research + Browser Use source (gdntn)

More on this in a future issue as it deserves a deep dive. (Note: only one of them uses an actual agent framework 🤔 and guess what: it was Smolagents!)

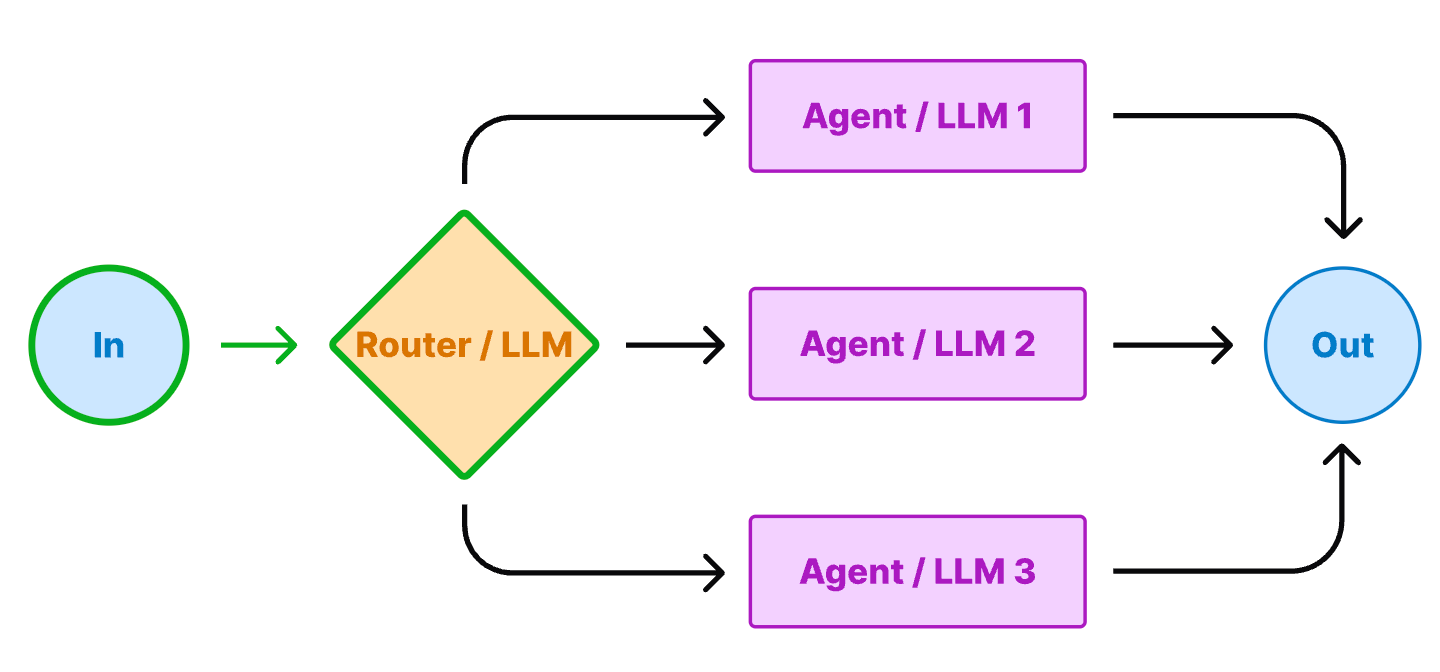

AI Agent workflow building blocks

Useful resource looking at reusable AI Agent design workflow building blocks like orchestrator, parallelization, routing, and chain.
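To give a flavour of what these building blocks look like, here is a minimal sketch of three of them (chain, routing, and parallelization) as plain Python functions. The names and signatures are my own assumptions, not taken from the resource, and each `Step` would be a language model call in a real agent rather than a string method.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# A step takes text in and gives text out (a stand-in for one LLM call).
Step = Callable[[str], str]


def chain(steps: List[Step]) -> Step:
    """Chain: feed the output of each step into the next."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def route(classify: Callable[[str], str], handlers: Dict[str, Step]) -> Step:
    """Routing: classify the input, then hand it to the matching handler."""
    def run(text: str) -> str:
        return handlers[classify(text)](text)
    return run


def parallel(steps: List[Step]) -> Callable[[str], List[str]]:
    """Parallelization: run every step on the same input at once, results in step order."""
    def run(text: str) -> List[str]:
        with ThreadPoolExecutor() as pool:
            return list(pool.map(lambda step: step(text), steps))
    return run


if __name__ == "__main__":
    tidy = chain([str.strip, str.upper])
    triage = route(
        classify=lambda text: "question" if text.endswith("?") else "statement",
        handlers={"question": lambda text: "answer", "statement": lambda text: "ack"},
    )
    print(tidy("  hi "), triage("why?"), parallel([str.upper, str.lower])("Hi"))
```

An orchestrator is then roughly a step that decides which of these to compose for a given request.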

Have your say!

Want this to be the best newsletter ever? I’d love to get your feedback.