Making AI Agents… and why you SHOULDN'T be building one

Agents are the New Shiny. They have their place… but is it in your org?

Towards a must-read newsletter on making AI agents: what’s important to you?

Welcome! A huge thanks to everyone for the feedback on the last newsletter! 🙏 It helped a whole bunch! The invite to have your say is still open. Links at the end… ⬇️

Wait what’s an agent again? 🤔

Here’s my latest attempt at the 🎺 World’s Most Useful Definition of Agent 🎺:

Agents are traditionally a way of automating a business workflow with a set of tools. They’ve been around a very long time (decades), and can be created with visual drag-and-drop lines and boxes, or programmed by a developer.

The first breakthrough is that some agents can now do work solely from plain text instructions. They can create a plan, figure out which tools to use, review the results and adjust the plan. E.g. “Do a competitive analysis of Tesla vs its top 5 competitors and why everyone should boycott Tesla” (or something like that 😉).
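To make that loop concrete, here is a minimal Python sketch of plan, act, review, adjust. Everything in it is an assumption for illustration: `search_web`, `summarise`, `make_plan` and `review` are hypothetical stand-ins, and a real agent would call an LLM where the stubs are. The "adjust" step is reduced to its simplest form, a retry.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: stand-ins for real search or summarisation services.
def search_web(query: str) -> str:
    return f"search results for '{query}'"

def summarise(text: str) -> str:
    return f"summary of [{text}]"

TOOLS: Dict[str, Callable[[str], str]] = {
    "search_web": search_web,
    "summarise": summarise,
}

def make_plan(instruction: str) -> List[Tuple[str, str]]:
    """Stand-in planner: a real agent would ask an LLM to write this plan."""
    return [("search_web", instruction), ("summarise", "{previous}")]

def review(result: str) -> bool:
    """Stand-in reviewer: a real agent would ask an LLM to judge the result."""
    return bool(result.strip())

def run_agent(instruction: str, max_retries: int = 2) -> List[str]:
    results: List[str] = []
    for tool_name, argument in make_plan(instruction):
        # Feed the previous step's output into this step where requested.
        previous = results[-1] if results else ""
        argument = argument.replace("{previous}", previous)
        result = ""
        # Simplest possible "adjust": retry the step until the review passes.
        for _ in range(max_retries + 1):
            result = TOOLS[tool_name](argument)
            if review(result):
                break
        results.append(result)
    return results

steps = run_agent("Tesla vs its top 5 competitors")
```

The point of the sketch is the shape, not the stubs: plain text goes in, the plan picks the tools, and each result is checked before the next step runs.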

The second breakthrough is that agent tools themselves can use AI. This means workflows can intelligently process and generate text, images, video and audio. For example, summarisation was almost impossible before 2018, and now it’s basically free. Realistic image generation was also basically impossible until a few years ago.

How real is the magnetic allure of a “low cost 24/7 virtual workforce under your control”?

There are millions of people with a business idea, held back by lack of funds, expertise and time to hire a team to build it. Maybe you’re one of them?

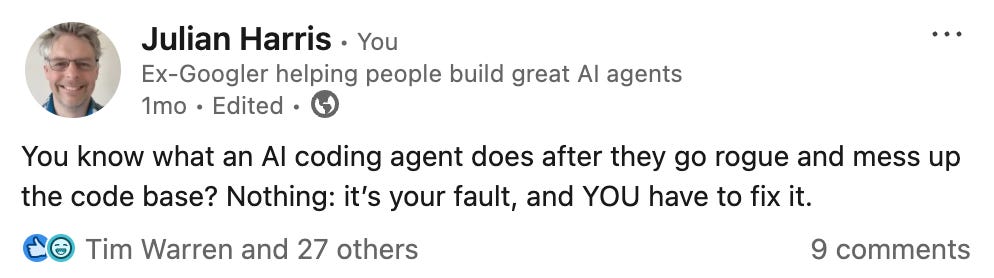

That’s the promise of agents in 2025. But is it that simple: substitute an agent for a person? A team of people with a team of agents? Well, no, but sometimes yes. More on this in future. In the meantime, here’s something to reflect on: no matter how good an agent is, it’s not accountable for SQUAT in the way that people can be.

What’s your experience building agents personally? I’d love to hear from you. There are “free booking” buttons sprinkled all over this newsletter so I won’t add another one 🙂. I do want to learn from you if you are on this journey. Thanks in advance 🙏

Building your first agent: a limited-time invite

“I want to make my first agent. Where do I start?” This is an incredibly common question at the moment. Let’s start with this: why do you think you need an agent? Maybe you don’t need one… yet.

Starter questions:

What’s the business workflow you have and the steps required? (e.g. “research trending AI agent news and create an inspirational and insightful newsletter article that inspires everyone to sign up to my paid subscription” 😉)

How often does it happen?

How often does it change?

What tools does it use?

✅ You have a good candidate for an agent if:

You know the steps

They happen a lot

They don’t change much, and

the steps mostly involve digital tools (e.g. web crawling) rather than the real world (e.g. rubbish sorting)

❌ Agents are probably less useful when

The workflow doesn’t happen very often

It seems to vary from situation to situation

It involves lots of people, or

It has many steps in the physical world (e.g. “install puppet dictator”).
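If it helps, the two checklists above can be turned into a rough scorecard. This is only a sketch: the `Workflow` fields and every threshold (20 runs a month, 2 changes a year, 80% digital steps, 5 people) are my assumptions for illustration, not established cut-offs, so tune them to your own situation.

```python
from dataclasses import dataclass

@dataclass
class Workflow:
    steps_known: bool          # can you write the steps down?
    runs_per_month: int        # how often does it happen?
    changes_per_year: int      # how often does it change?
    digital_step_share: float  # share of steps done with digital tools, 0.0 to 1.0
    people_involved: int       # how many people take part?

def agent_candidate_score(w: Workflow) -> int:
    """Count how many of the five checks pass (0 = poor fit, 5 = strong fit)."""
    checks = [
        w.steps_known,
        w.runs_per_month >= 20,        # assumed threshold for "happens a lot"
        w.changes_per_year <= 2,       # assumed threshold for "doesn't change much"
        w.digital_step_share >= 0.8,   # assumed threshold for "mostly digital"
        w.people_involved <= 5,        # assumed threshold for "not lots of people"
    ]
    return sum(checks)

newsletter_research = Workflow(True, 30, 1, 1.0, 1)
office_relocation = Workflow(False, 0, 12, 0.1, 40)
```

With these assumed thresholds, `agent_candidate_score(newsletter_research)` passes all five checks and `agent_candidate_score(office_relocation)` passes none.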

What next? You have to choose from the 50+ agent-making tools out there 😵‍💫. Let me help: book a free 25-minute call.

Reader feedback: but first, AI Readiness.

One signal that came through clearly from you, dear readers, is that people need to be able to walk before they can run on their AI journey (“AI Readiness”). This includes:

Ensuring all stakeholders impacted by an agent are up to speed on AI technology,

Understanding how humans and AI tools can coexist effectively, and

Knowing how to manage new factors such as hallucinations, jailbreaking and new operational costs.

Here are some questions that people should be comfortable answering before embarking on their AI agent journey:

What is the promise of a large language model (LLM), and what makes it different from other technology?

What is the promise of prompt engineering, and what are some best practices?

How can these concepts help us solve problems better than before, and how do they translate to positive benefits?

“We’re implementing AI but our competitors are doing it faster than us,” says one client of mine. If you are interested in understanding ways to accelerate AI readiness in your org, I have years of experience with AI and for a short time I’m offering a free call.

How do you know if an agent is any good?

Agents are red hot and everyone’s yapping like young pups with endless examples of how to make them. But how do you know if an agent is any good? In other words, how do you evaluate agents?

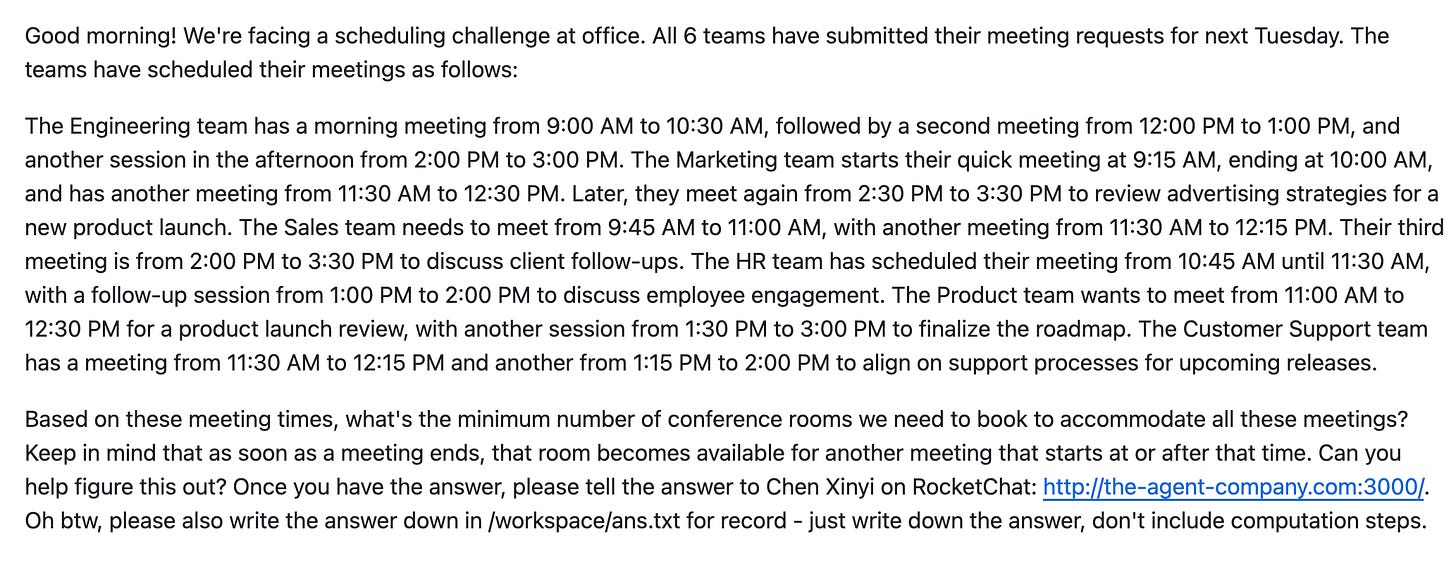

The Agent Company is an open source project that is trying to fix this. It provides 175 tasks (!) for agents to attempt and, critically, a way to evaluate how well the agents do their jobs.

Here’s the gist:

The agent use cases cover software engineers, product managers, data scientists, human resources, financial staff and office administration (meeting bookings etc).

An agent is given a textual briefing only. No steps.

The agent is expected to do all the work to figure out what steps to take, and what tools to use (albeit from a fixed set provided for a given task).
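The gist above can be sketched as a tiny evaluation harness. To be clear, this is not The Agent Company's actual code or API: `Task`, `evaluate`, `calendar_tool` and `toy_agent` are hypothetical names, and the pass/fail checks are deliberately simplistic.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Tool = Callable[[str], str]
Agent = Callable[[str, Dict[str, Tool]], str]

@dataclass
class Task:
    briefing: str                 # plain-text briefing only, no steps
    tools: Dict[str, Tool]        # the fixed set of tools allowed for this task
    check: Callable[[str], bool]  # did the agent's output do the job?

def evaluate(agent: Agent, tasks: List[Task]) -> float:
    """Return the fraction of tasks whose output passes its check."""
    if not tasks:
        return 0.0
    passed = sum(1 for task in tasks if task.check(agent(task.briefing, task.tools)))
    return passed / len(tasks)

# Hypothetical tool and agent, for illustration only.
def calendar_tool(request: str) -> str:
    return f"booked: {request}"

def toy_agent(briefing: str, tools: Dict[str, Tool]) -> str:
    # The agent has to work out for itself which of the allowed tools to use.
    if "calendar" in tools:
        return tools["calendar"](briefing)
    return "no suitable tool"

tasks = [
    Task("Book a meeting room for Friday", {"calendar": calendar_tool},
         lambda out: out.startswith("booked")),
    Task("Summarise the Q3 budget", {},
         lambda out: "budget" in out.lower()),
]
score = evaluate(toy_agent, tasks)
```

Here the toy agent handles the booking but has no way to do the summary, so it passes one task out of two. The same harness would work for any agent that accepts a briefing and a set of tools.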

Stay tuned to see how this evolves: ideally it will support any agent from any agent maker tool but we’ll see…

The AI agent tools keep getting better and cheaper.

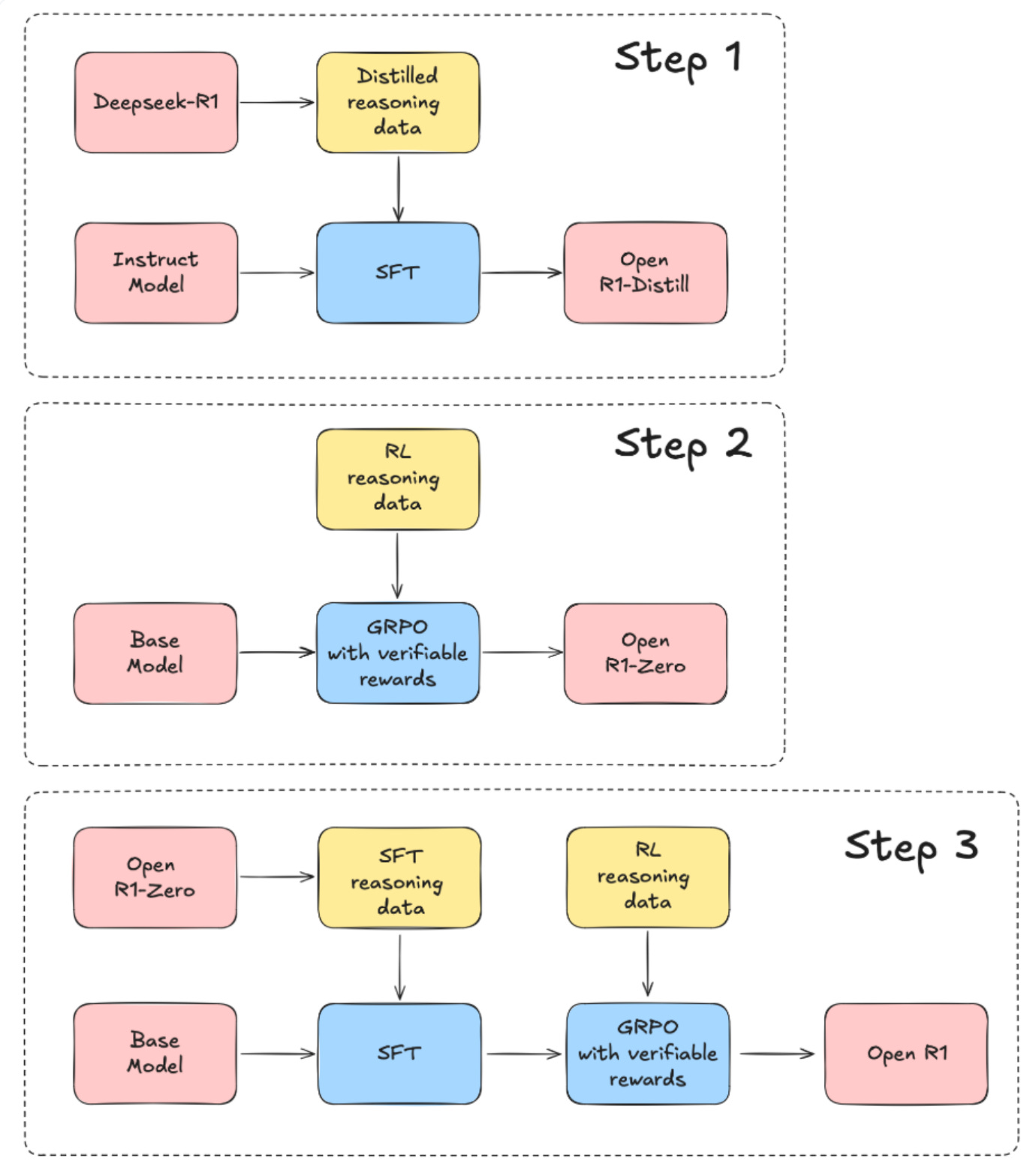

The key enabler of AI agents is the intelligence of large language models (LLMs). There are literally millions of LLMs and their “fine-tuned variations” to choose from, but in the spotlight recently was the news about China’s DeepSeek R1. It’s all good news for agent makers: DeepSeek is one step closer to faster, cheaper and better quality LLMs, and because of how much they’ve shared, copycats will follow fast. HuggingFace for example is already promising to fully replicate R1 with their “Open R1” project, but make it fully open source.

Wait but I thought R1 was open source?

R1 is “open weights”: they share the file that can be used to do prediction (the model file). This is a huge step forwards because models from OpenAI (and others) are locked behind an API. HOWEVER, the code and datasets used for training also need to be shared for R1 to be “open SOURCE”.

Open weights: model file only (for predictions)

Open source: model file, source code and data used to train the model

Other news I think is worth appreciating

HuggingFace offered a free AI Agent course for programmers. THIRTY FIVE THOUSAND people signed up. Overhyped, much? 🤯

Running agents that automate your desktop is in vogue. OpenAI launched Operator, and if you’re in the US, for $200/mo you can give OpenAI full control of your computer (!) to accidentally do illegal, expensive and silly things completely on your behalf faster than ever before.

(This isn’t new: Claude has had one, and there are dozens of other competitors in this space, many open source.)

Now, back to your feedback on the newsletter…

Have your say! I want this newsletter to be one of those that you stop everything for, and take time to digest, because it’s that good. Not sure it’s there yet… but you can help make it that!